Introducing the First AI Model That Translates 100 Languages Without Relying on English

This article from Meta Platforms (Facebook) may be of interest to subscribers. Here is a section:

•Facebook AI is introducing M2M-100, the first multilingual machine translation (MMT) model that can translate between any pair of 100 languages without relying on English data. It’s open sourced here.

•When translating, say, Chinese to French, most English-centric multilingual models train on Chinese to English and English to French, because English training data is the most widely available. Our model directly trains on Chinese to French data to better preserve meaning. It outperforms English-centric systems by 10 points on the widely used BLEU metric for evaluating machine translations.

•M2M-100 is trained on a total of 2,200 language directions — or 10x more than previous best, English-centric multilingual models. Deploying M2M-100 will improve the quality of translations for billions of people, especially those that speak low-resource languages.

•This milestone is a culmination of years of Facebook AI’s foundational work in machine translation. Today, we’re sharing details on how we built a more diverse MMT training data set and model for 100 languages. We’re also releasing the model, training, and evaluation setup to help other researchers reproduce and further advance multilingual models.
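To put the "language directions" figure in context, here is a minimal sketch of the arithmetic, not Facebook's code. The language codes are placeholders and the function names (`english_centric_directions`, `all_directions`, `pivot_route`) are hypothetical, introduced only for illustration. It counts how many translation directions exist among 100 languages and shows the two hops an English-centric system would take for a pair like Chinese to French.

```python
from itertools import permutations


def all_directions(languages):
    """Every ordered (source, target) pair of distinct languages."""
    return list(permutations(languages, 2))


def english_centric_directions(languages, pivot="en"):
    """Only the directions that translate into or out of the pivot language."""
    return [(s, t) for s, t in permutations(languages, 2) if pivot in (s, t)]


def pivot_route(src, tgt, pivot="en"):
    """Hops an English-centric system needs to get from src to tgt."""
    if src == pivot or tgt == pivot:
        return [(src, tgt)]               # already a direct direction
    return [(src, pivot), (pivot, tgt)]   # two hops through the pivot


# 100 placeholder language codes (assumption: real codes do not matter here).
languages = ["en"] + [f"lang{i:02d}" for i in range(1, 100)]

total = len(all_directions(languages))                  # 100 * 99
via_english = len(english_centric_directions(languages))  # 2 * 99

print(total, via_english)
print(pivot_route("zh", "fr"))
```

Under these assumptions, 100 languages give 9,900 possible directions, of which only 198 touch English; the 2,200 directions quoted above sit between those two figures.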

The shift in artificial intelligence away from iterative learning toward free associative learning is a major innovation, one that Google’s DeepMind has also used to make substantial progress on a range of medical questions.

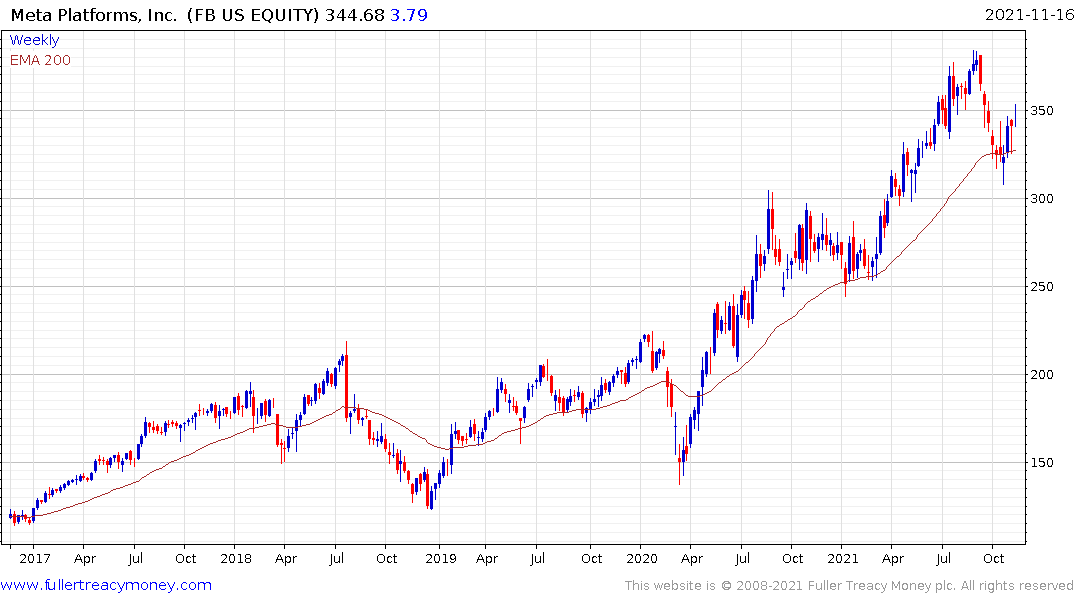

Facebook continues to rebound from the region of the trend mean.

Investment pitches often overstate the benefits of artificial intelligence, but progress is tangible in a number of fields. There is a massive shortage of translators for streaming services as they move into international markets. Automatic subtitling removes a roadblock to growth for companies like Netflix or Disney.

Netflix successfully broke out of its most recent range and continues to trend higher.

Disney broke downwards last week on lower streaming revenues. It will need to demonstrate support in the region of the trend mean if the benefit of the doubt is to be given to the upside.

Drug discovery and the selection of candidates for additional study are also significantly improved by deep learning and artificial intelligence. Results don’t happen all at once, but the pace at which they can be reached accelerates.

The challenge for investors at present is that the biotech sector is dominated by vaccine producers. The Nasdaq Biotechnology Index’s largest constituents are AstraZeneca, Sanofi, Amgen, Moderna, Gilead Sciences, Regeneron Pharmaceuticals and BioNTech SE. Together they represent 46.5% of the Index’s market cap.

The future of the healthcare sector lies beyond the near-term focus on a winner-takes-all approach to COVID vaccines. That is the primary reason the Index is struggling to perform at present.